Visualising crawl data from Screaming Frog

A Looker Studio template to analyse your Screaming Frog crawl data in an actionable way.

For a while now, I have wanted to share a Google Data Studio (now Looker Studio) template to visualise crawl data from Screaming Frog, but keeping it simple proved hard. The recent release of the URL Inspection API made me rebuild the template from scratch, and it is now available.

Building a dashboard in Data Studio is not that hard; deciding how to present the data is. What is the most convenient and accessible way to present it? This question was a real struggle, as I wanted to create something simple yet useful and powerful. So if you use this template, please consider buying me a coffee to support the work behind it.

Prepare your data in Screaming Frog

- Before running the crawl, connect the Google Search Console API by heading to Configuration > API Access > Google Search Console.

- In the Search Analytics tab, tick “Crawl new URLs Discovered in GSC”.

- In the URL Inspection tab, tick “Enable URL Inspection”.

- Once the crawl is over, export the following:
  - Internal All (directly from the interface, by clicking the “Export” button).
  - All Inlinks and All Outlinks from Bulk Export > Links.
  - Orphan pages from Reports > Orphan Pages.
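The Segmentation fields described below read the Address column of Internal All and the Source and Destination columns of All Inlinks, so it can help to check the exports before uploading them. Here is a minimal Python sketch, assuming standard CSV exports whose first row is the header; the `REQUIRED` mapping and the file name in the usage line are hypothetical.

```python
import csv
from typing import Iterable

# Hypothetical mapping: export name -> columns the Segmentation formulas read.
REQUIRED = {
    "Internal All": {"Address"},
    "All Inlinks": {"Source", "Destination"},
}


def read_header(path: str) -> list[str]:
    """Return the first row of a CSV file (utf-8-sig drops a leading BOM if present)."""
    with open(path, newline="", encoding="utf-8-sig") as f:
        return next(csv.reader(f), [])


def missing_columns(header: Iterable[str], required: set[str]) -> set[str]:
    """Return the required column names that are absent from the header."""
    # Strip stray whitespace and any BOM left on the first column name.
    present = {name.strip().lstrip("\ufeff") for name in header}
    return required - present
```

For example, `missing_columns(read_header("internal_all.csv"), REQUIRED["Internal All"])` returns an empty set when the export contains the column the formula needs.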

Add your own data to the template

- First things first, import your exported data into Google Sheets, using one spreadsheet per data type. You should end up with four spreadsheets: Internal All, Inlinks, Outlinks and Orphan pages.

- In Google Sheets, click File > Import, select your file and choose “Replace spreadsheet”.

- Keep the default sheet names created by the import; do not rename them.

- Optionally, delete the .csv files added to the root of your Google Drive after the import, just to keep your folders and files clean 🙂

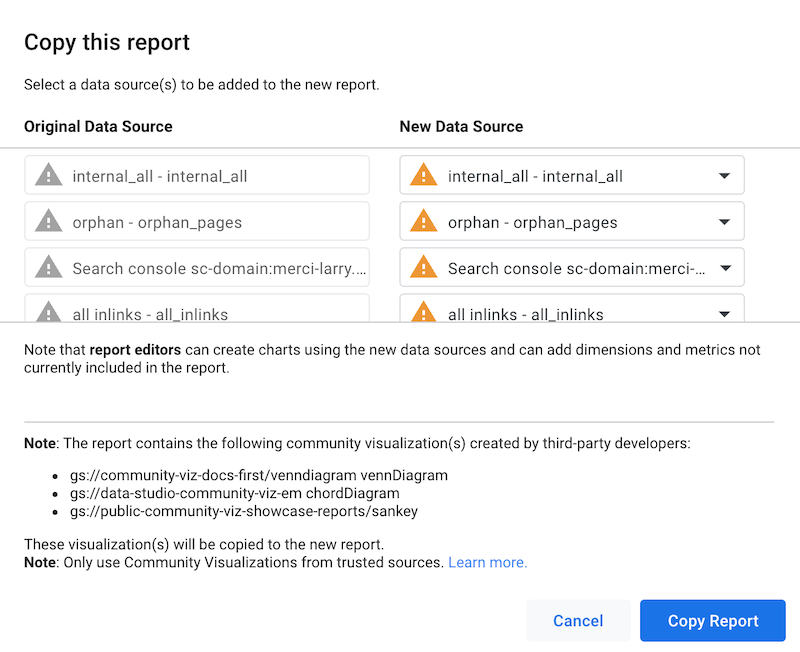

- Click Make a copy at the top right of the template.

- Then, to avoid warnings, click “Copy report” directly.

- Do not select your own data sources in this dialog: doing so would override the calculated fields and blended data I created.

- You will then land on your copy of the template, with the following message on charts and tables: “Insufficient permissions to the underlying data set.”

- Go to Resource > Manage added data sources.

- Click “Edit” on each one, select the corresponding data source and click “Reconnect”.

- For Search Console, make sure to select the URL Impression table.

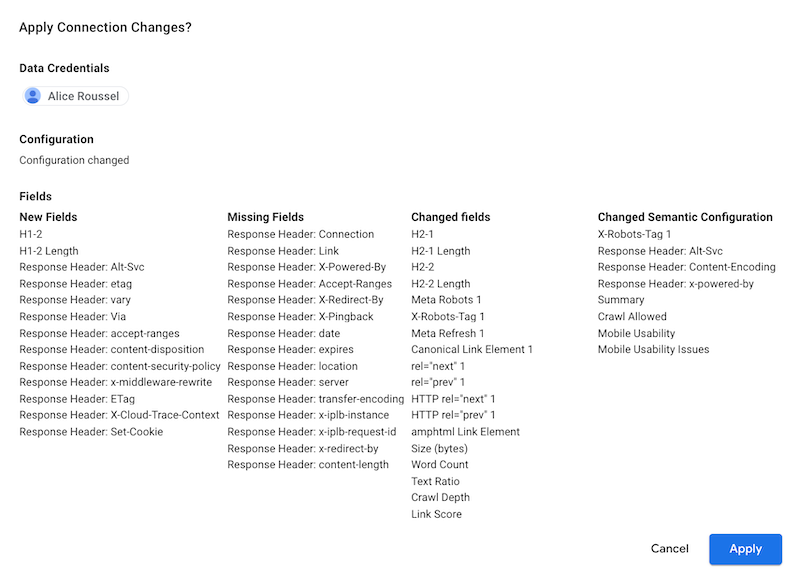

- When connecting your Internal All data, you may see the following window; just click Apply.

Now you have added your own data sources. A few more steps need to be done before using the template.

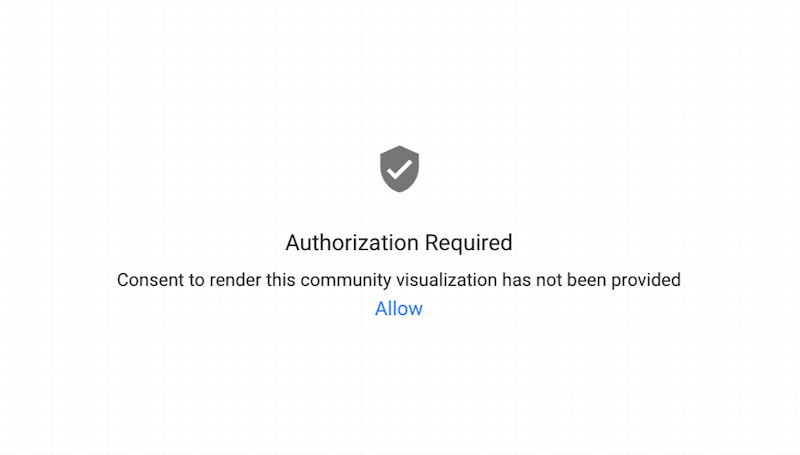

I use three community visualisations that you need to allow:

Last but not least, I use several calculated fields, one of which you need to update for both Internal All and All Inlinks: the field named “Segmentation”.

- Internal All: hover over the Segmentation field, click “fx” and edit the formula to fit your site structure.

```
CASE
  WHEN REGEXP_MATCH(Address,'https://merci-larry.com|https://merci-larry.com/') THEN 'Homepage'
  WHEN REGEXP_CONTAINS(Address,'wp-content') THEN 'Images'
  WHEN REGEXP_CONTAINS(Address,'reporting') THEN 'Data Studio templates'
  WHEN REGEXP_CONTAINS(Address,'articles') THEN 'Hub'
  ELSE 'Articles'
END
```

- All Inlinks: you need to update both Segmentation destination and Segmentation source:

Segmentation destination:

```
CASE
  WHEN REGEXP_MATCH(Destination,'https://merci-larry.com|https://merci-larry.com/') THEN 'Homepage'
  WHEN REGEXP_CONTAINS(Destination,'wp-content') THEN 'Images'
  WHEN REGEXP_CONTAINS(Destination,'reporting') THEN 'Data Studio templates'
  WHEN REGEXP_CONTAINS(Destination,'articles') THEN 'Hub'
  ELSE 'Articles'
END
```

Segmentation source:

```
CASE
  WHEN REGEXP_MATCH(Source,'https://merci-larry.com|https://merci-larry.com/') THEN 'Homepage'
  WHEN REGEXP_CONTAINS(Source,'wp-content') THEN 'Images'
  WHEN REGEXP_CONTAINS(Source,'reporting') THEN 'Data Studio templates'
  WHEN REGEXP_CONTAINS(Source,'articles') THEN 'Hub'
  ELSE 'Articles'
END
```

Finally, click Refresh data at the top right of the dashboard, and you are good to go.
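Before pasting an edited Segmentation formula into Looker Studio, you can dry-run its rules locally. The Python sketch below mirrors the Internal All formula, assuming that REGEXP_MATCH requires the whole URL to match (emulated with `re.fullmatch`) while REGEXP_CONTAINS looks for the pattern anywhere in the URL (emulated with `re.search`). Python's `re` module is not RE2, the regex engine Looker Studio uses, but the two behave the same for simple patterns like these. `RULES`, `DEFAULT` and `segment` are illustrative names; swap in your own domain and rules.

```python
import re

# Ordered rules mirroring the CASE formula: (function, pattern, segment label).
# "match" stands for REGEXP_MATCH (whole string), "contains" for REGEXP_CONTAINS.
RULES = [
    ("match", r"https://merci-larry.com|https://merci-larry.com/", "Homepage"),
    ("contains", r"wp-content", "Images"),
    ("contains", r"reporting", "Data Studio templates"),
    ("contains", r"articles", "Hub"),
]
DEFAULT = "Articles"  # the ELSE branch


def segment(url: str) -> str:
    """Return the label of the first rule that matches, like CASE ... WHEN ... ELSE ... END."""
    for kind, pattern, label in RULES:
        if kind == "match":
            hit = re.fullmatch(pattern, url) is not None
        else:
            hit = re.search(pattern, url) is not None
        if hit:
            return label
    return DEFAULT


if __name__ == "__main__":
    for url in [
        "https://merci-larry.com/",
        "https://merci-larry.com/wp-content/uploads/logo.png",
        "https://merci-larry.com/some-post/",
    ]:
        print(url, "->", segment(url))
```

As with CASE, the first matching rule wins: a URL containing both “articles” and “reporting” ends up in Data Studio templates, so order your rules from most to least specific.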

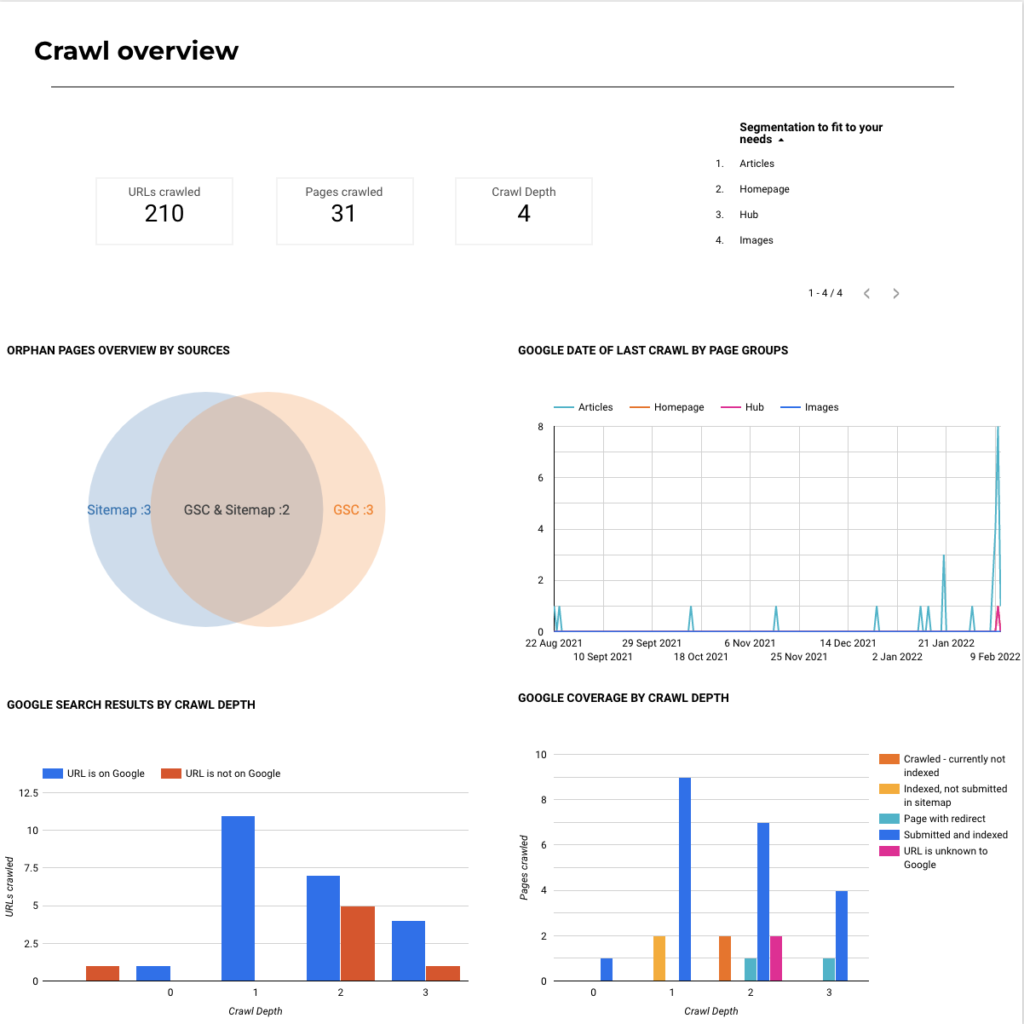

Crawl overview

List of data available:

- URLs crawled

- Pages crawled

- Crawl depth

- Segmentation overview

- Orphan pages overview by sources

- Google data of last crawl by page groups

- Google search results by crawl depth

- Google coverage by crawl depth

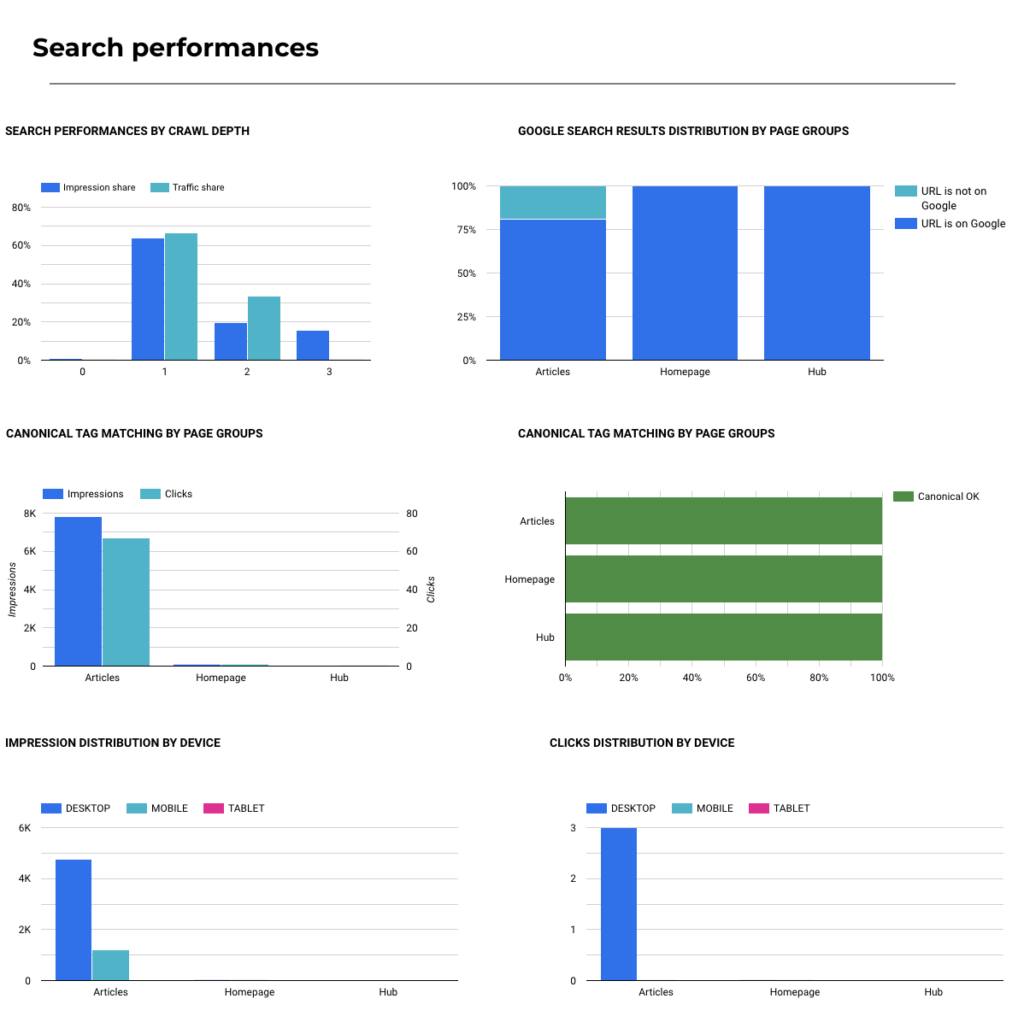

Search performances

List of data available:

- Search performances by crawl depth

- Google search results distribution by page groups

- Canonical tag matching by page groups

- Impression distribution by device

- Clicks distribution by device

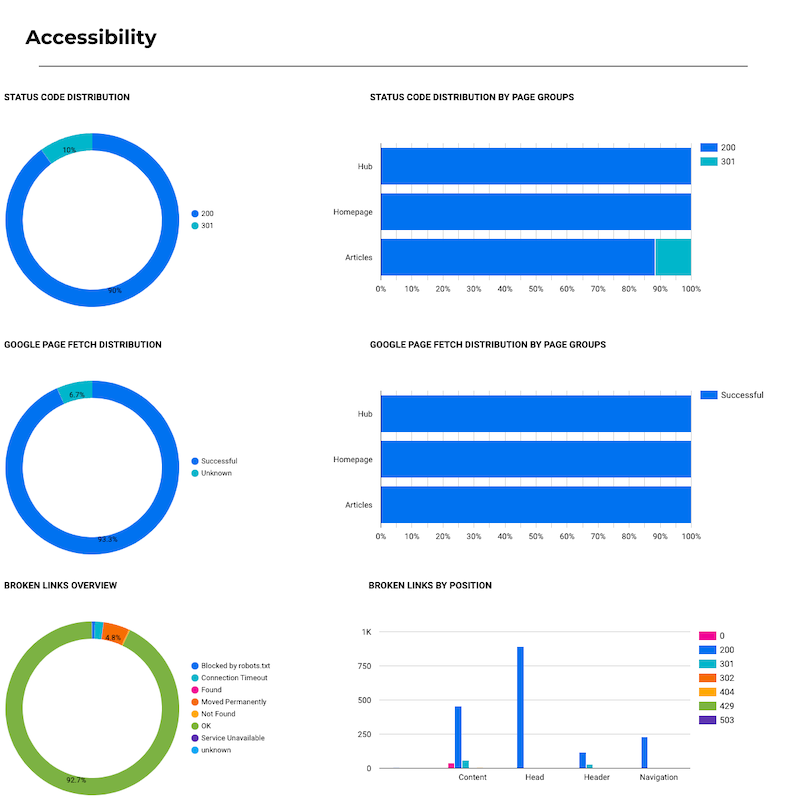

Accessibility

List of data available:

- Status code distribution

- Status code distribution by page groups

- Google page fetch distribution

- Google page fetch distribution by page groups

- Broken links overview

- Broken links by position

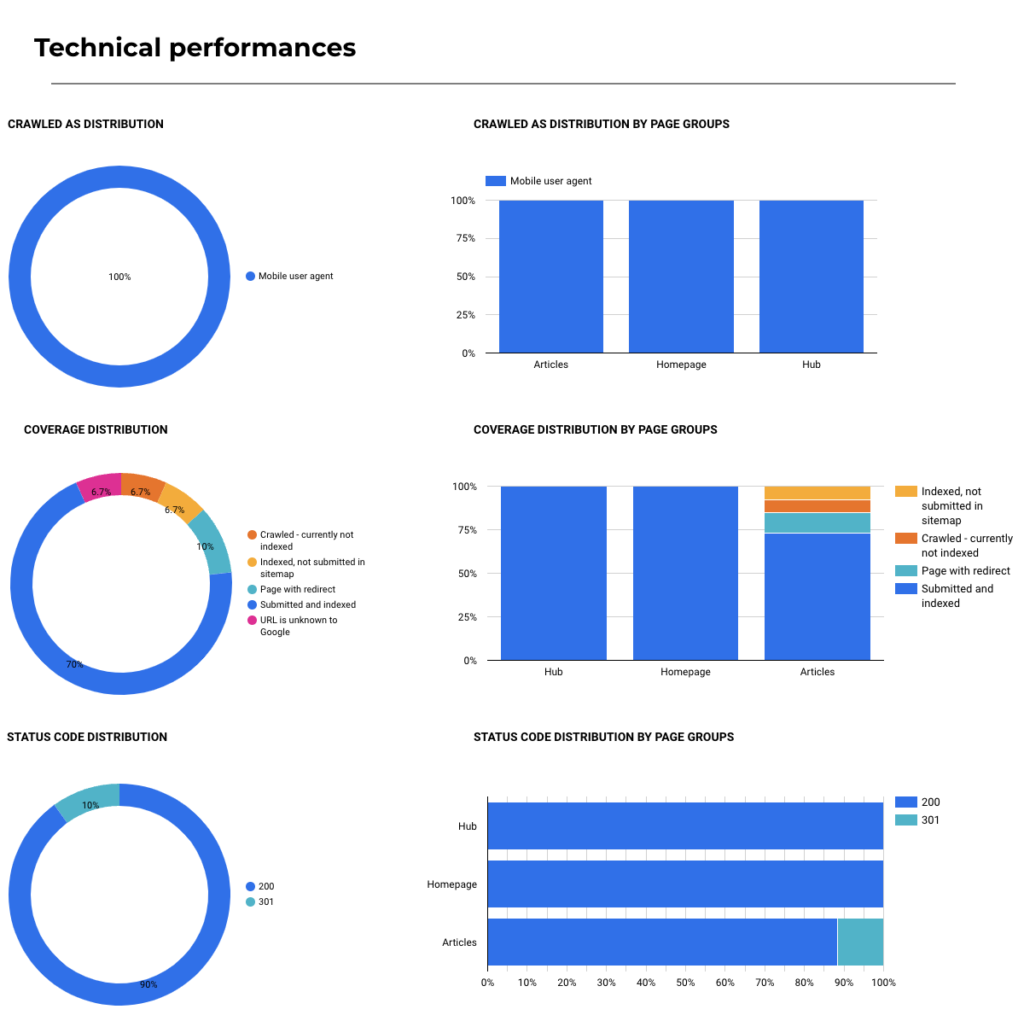

Technical performances

List of data available:

- Crawled as distribution

- Crawled as distribution by page groups

- Coverage distribution

- Coverage distribution by page groups

- Status code distribution

- Status code distribution by page groups

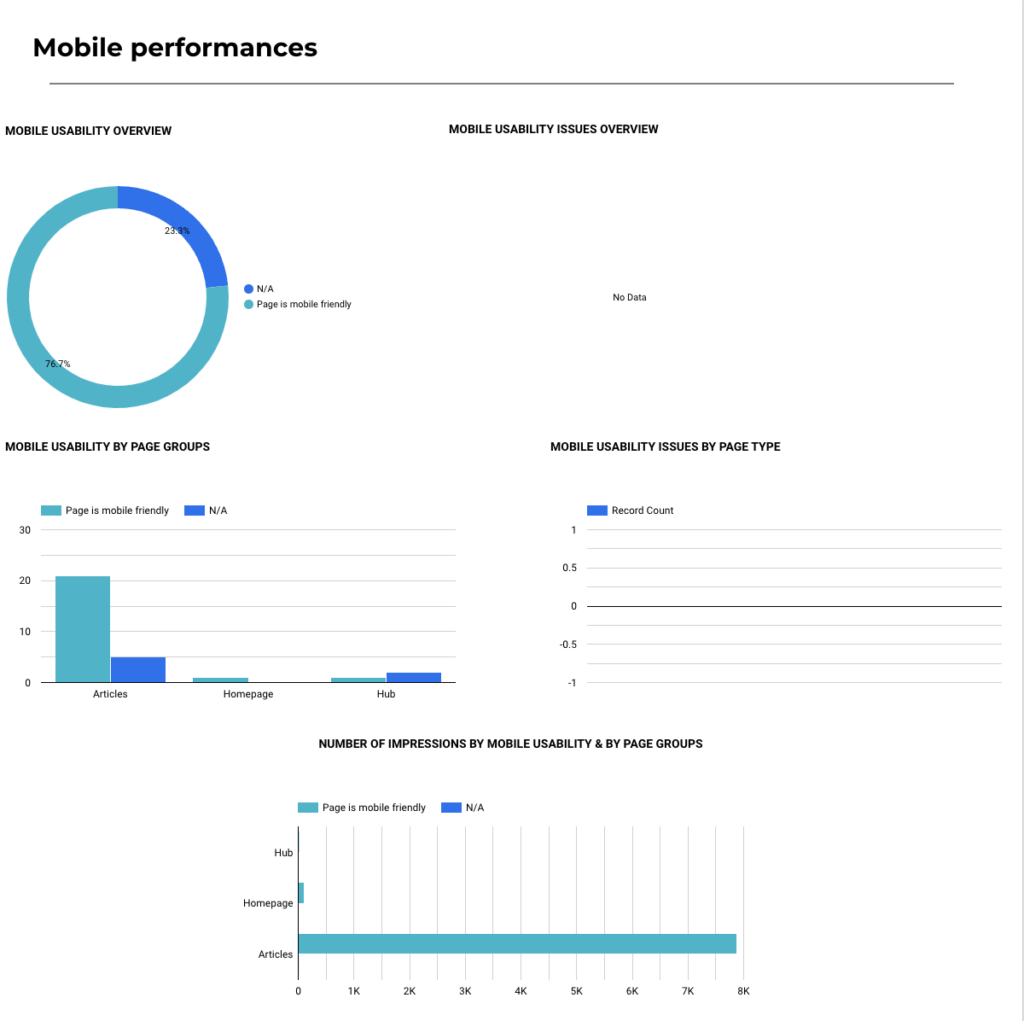

Mobile performances

List of data available:

- Mobile usability overview

- Mobile usability issues overview

- Mobile usability by page groups

- Mobile usability issues by page type

- Number of impressions by mobile usability and page groups

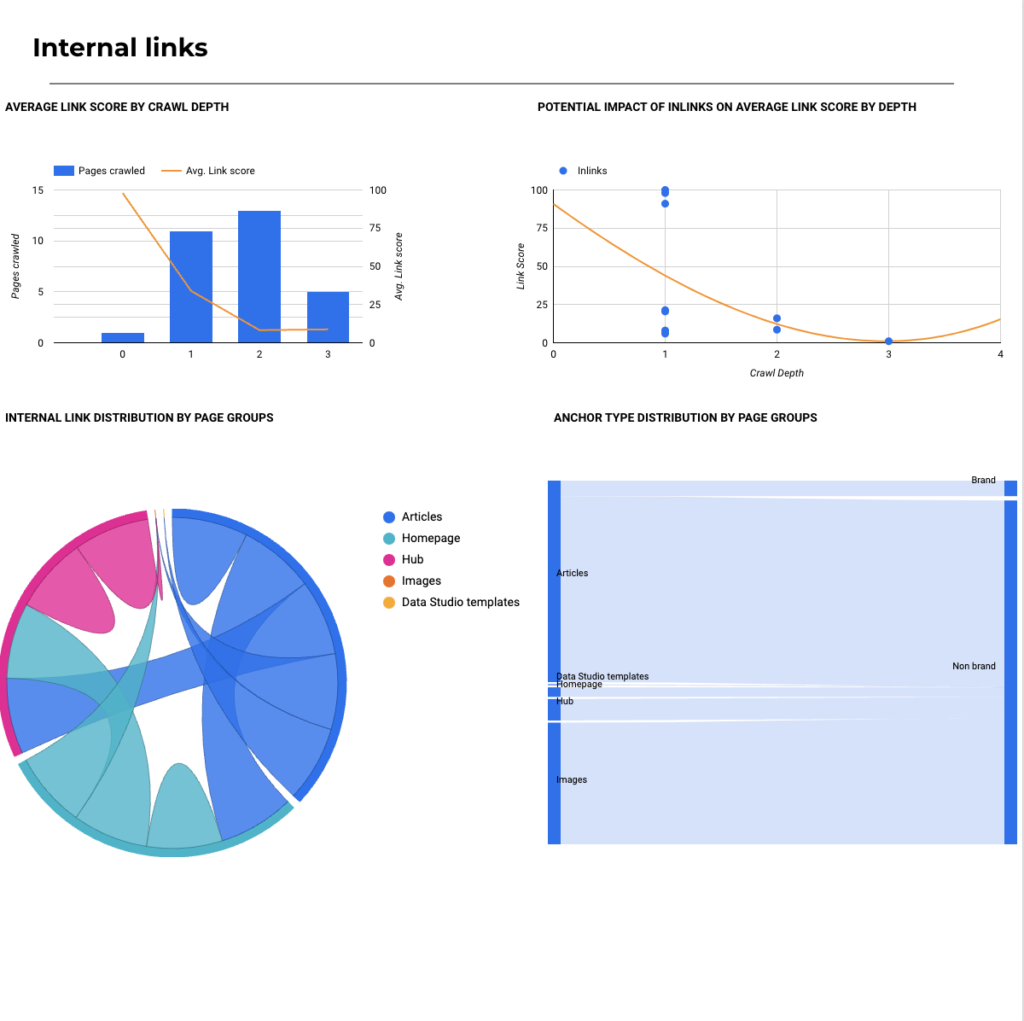

Internal links

List of data available:

- Average link score by crawl depth

- Potential impact of inlinks on average link score by crawl depth

- Internal link distribution by page groups

- Anchor type distribution by page groups
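To sanity-check the average link score by crawl depth against the raw export, the aggregation can be reproduced outside Looker Studio. Here is a minimal Python sketch, assuming the Internal All export has “Crawl Depth” and “Link Score” columns and that rows missing either value (for example non-HTML resources) should be skipped; the function name is hypothetical and this is not necessarily how the template computes its chart.

```python
from collections import defaultdict
from typing import Iterable, Mapping, Optional


def average_link_score_by_depth(rows: Iterable[Mapping[str, Optional[str]]]) -> dict[int, float]:
    """Average the Link Score of rows grouped by Crawl Depth, sorted by depth."""
    totals: dict[int, float] = defaultdict(float)
    counts: dict[int, int] = defaultdict(int)
    for row in rows:
        depth = (row.get("Crawl Depth") or "").strip()
        score = (row.get("Link Score") or "").strip()
        if not depth or not score:
            continue  # skip rows without a depth or a score
        d = int(depth)
        totals[d] += float(score)
        counts[d] += 1
    return {d: totals[d] / counts[d] for d in sorted(totals)}
```

The rows can come straight from `csv.DictReader` over the exported file, opened with `newline=""`.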

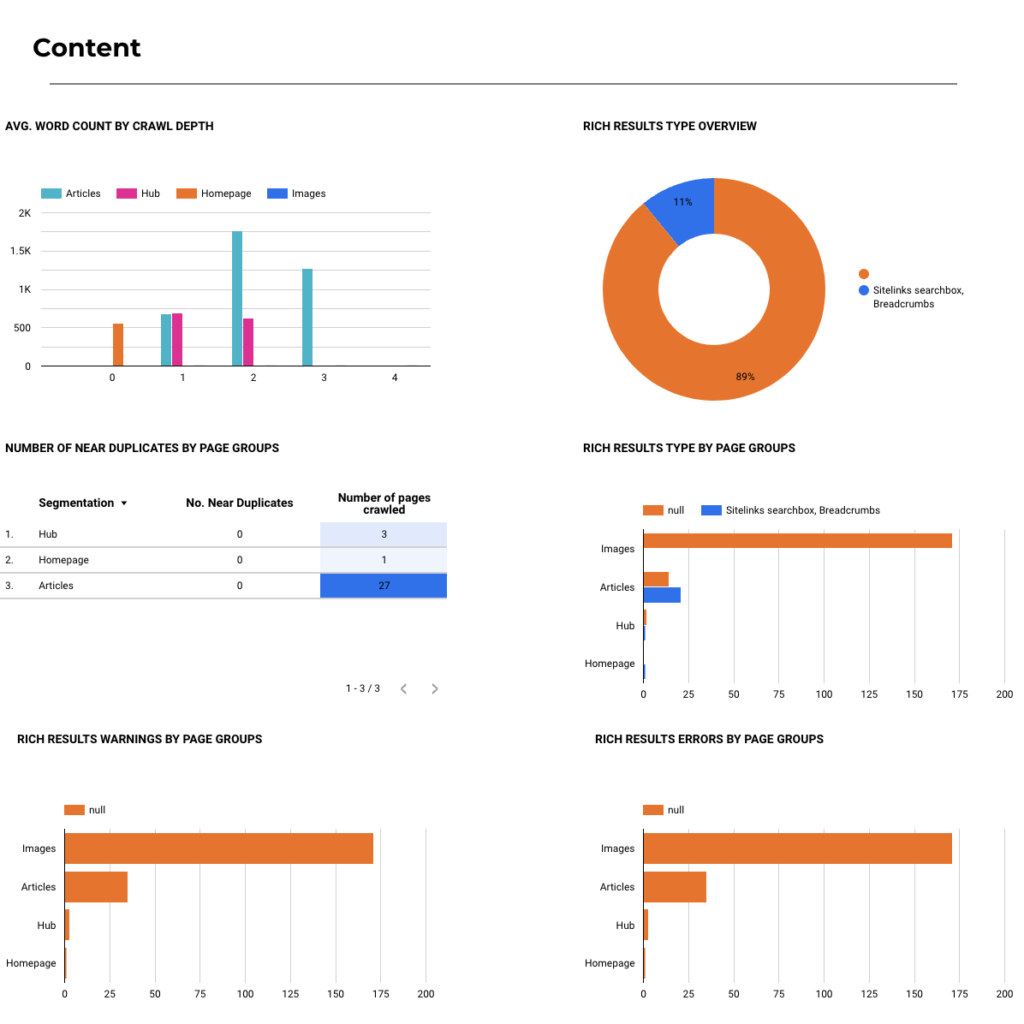

Content

List of data available:

- Average word count by crawl depth

- Rich results type overview

- Number of near duplicates by page groups

- Rich results type by page groups

- Rich results warnings by page groups

- Rich results errors by page groups

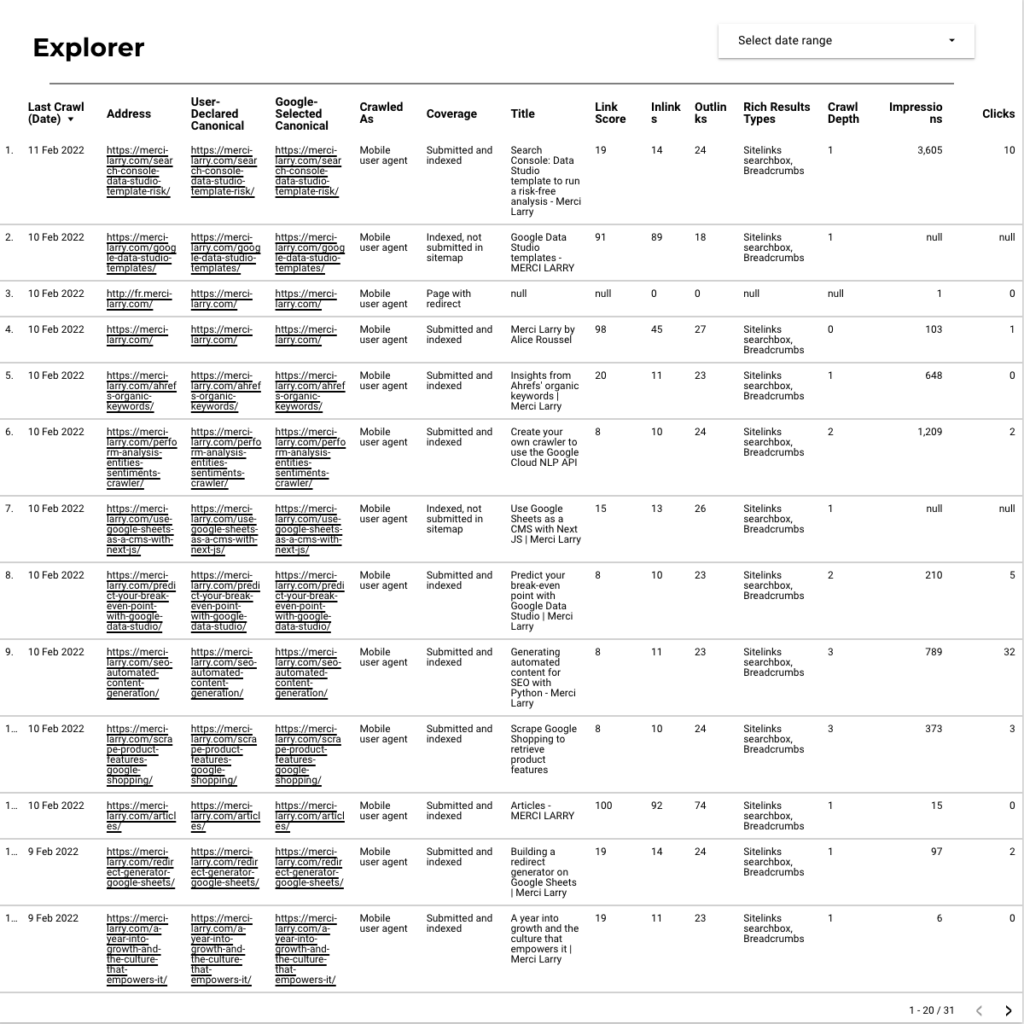

Explorer

List of data available:

- Last crawl date

- URL

- User declared canonical

- Google selected canonical

- Crawled as

- Coverage

- Title

- Link score

- Inlinks

- Outlinks

- Rich results types

- Crawl depth

- Impressions

- Clicks

URL inspection API usage limits

Keep in mind that the URL Inspection API has usage limits (2,000 queries per day and 600 queries per minute per Search Console property), so you may get partial results depending on the number of URLs crawled.
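To estimate how partial the inspection data may be, compare the number of crawled URLs with the daily quota. A back-of-the-envelope Python sketch, assuming a quota of 2,000 inspections per day per property and one inspection per URL; `inspection_coverage` is a hypothetical helper, not part of Screaming Frog or the API.

```python
import math

# Assumed quota: URL Inspection API calls per day for one Search Console property.
DAILY_QUOTA = 2000


def inspection_coverage(urls_crawled: int, daily_quota: int = DAILY_QUOTA) -> tuple[float, int]:
    """Return (share of URLs inspectable in one day, days needed to inspect all URLs)."""
    if urls_crawled <= 0:
        return 1.0, 0
    share = min(1.0, daily_quota / urls_crawled)
    days = math.ceil(urls_crawled / daily_quota)
    return share, days
```

For a 5,000-URL crawl, this gives a share of 0.4 and 3 days: only about 40% of the URLs would get inspection data from a single day's quota.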

Enjoy, and share your feedback!

Added to the Accessibility tab:

- Broken links overview

- Broken links by position
