Log analysis: efficiently manage your SEO actions

Without log analysis, you can end up optimizing a page that search engines never crawl, or crawl too rarely for the work to produce tangible results. Log analysis provides information available nowhere else and grounds SEO actions in facts.

The data presented in this article come from OnCrawl. The names of the page groups have been changed to preserve the confidentiality of the website (an e-commerce site), but this does not prevent us from studying the logs.

First step: configuring the crawl

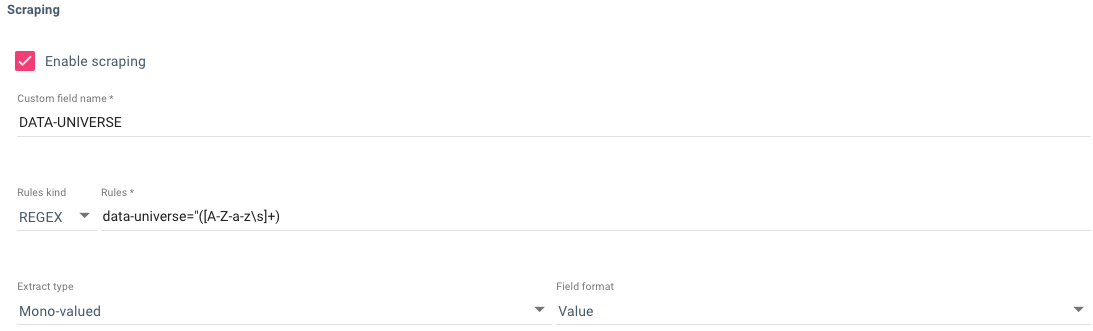

Before diving headfirst into the log analysis, I configured my crawl to isolate my page groups according to the silos defined earlier when setting up the internal linking. There are several ways to do this, depending on how your website is built. In my case, the “data-universe” field in the body allowed me to isolate my page groups, but you can also do it via the Tag Manager container, for example.
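As an illustration of this kind of segmentation, here is a minimal Python sketch. It assumes, hypothetically, that the `data-universe` attribute sits on the `<body>` tag and that you already have each page's HTML; the function names are made up for the example, and this is not how OnCrawl performs segmentation internally.

```python
from collections import defaultdict
from html.parser import HTMLParser
from typing import Dict, List


class UniverseParser(HTMLParser):
    """Captures the value of a (hypothetical) data-universe attribute on <body>."""

    def __init__(self):
        super().__init__()
        self.universe = None

    def handle_starttag(self, tag, attrs):
        if tag == "body" and self.universe is None:
            # attrs is a list of (name, value) pairs with lowercased names
            self.universe = dict(attrs).get("data-universe")


def page_group(html: str, default: str = "ungrouped") -> str:
    parser = UniverseParser()
    parser.feed(html)
    return parser.universe or default


def group_pages(pages: Dict[str, str]) -> Dict[str, List[str]]:
    """Maps each group name to the URLs whose HTML declares it."""
    groups = defaultdict(list)
    for url, html in pages.items():
        groups[page_group(html)].append(url)
    return dict(groups)


pages = {
    "/shoes/runner-x": '<html><body data-universe="shoes"><h1>Runner X</h1></body></html>',
    "/bags/tote": '<html><body data-universe="bags"></body></html>',
    "/about": "<html><body></body></html>",
}
print(group_pages(pages))
# {'shoes': ['/shoes/runner-x'], 'bags': ['/bags/tote'], 'ungrouped': ['/about']}
```

Pages without the attribute fall into an `ungrouped` bucket, which makes gaps in the segmentation easy to spot.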

I then chose to correlate my data with Google Analytics data via the integration option. At this stage, I am not interested in linking Majestic SEO data, because technical and editorial SEO optimizations let us produce results without needing to acquire links.

Crawl behavior analysis

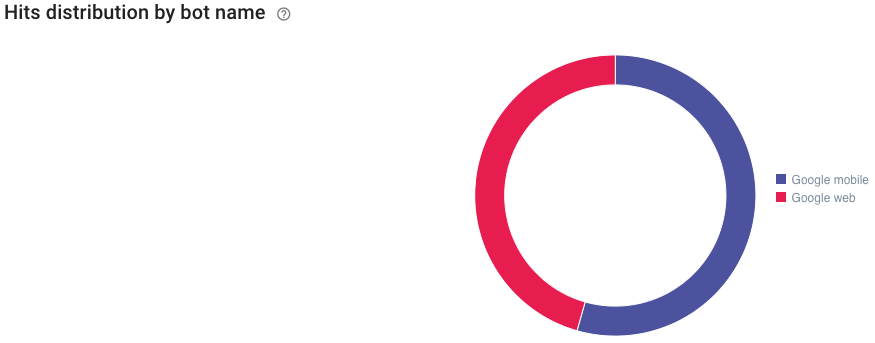

The distribution of hits tells me which bot crawls my website the most. This matters to me given the Mobile First Index currently being rolled out.

Googlebot Mobile is the bot that crawls my website the most (54.4%)! This is not necessarily good news, because the site's loading times on mobile have always been longer than on desktop. Worse still, I know the website lags behind here, because competitors have deployed better optimizations on this front. If I assume that Google crawls every website in this sector via Googlebot Mobile, this could slow my ranking growth in the short term. Optimizing loading times can be a long, demanding battle for developers.
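To see where a split like this comes from, here is a rough Python sketch that computes the hit distribution from raw access logs. It assumes logs in the Apache/Nginx combined format and classifies hits by user agent only: the Googlebot Smartphone user agent contains "Mobile", the desktop one does not. User agents can be spoofed, so a real analysis should also verify the IPs (for example with a reverse DNS lookup), which this sketch does not do.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Optional

# Combined Log Format: the user agent is the last quoted field.
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referer>[^"]*)" "(?P<ua>[^"]*)"'
)


def classify_bot(user_agent: str) -> Optional[str]:
    """Rough user-agent classification; returns None for non-Googlebot hits."""
    if "Googlebot" not in user_agent:
        return None
    # Googlebot Smartphone announces a mobile browser ("Mobile") in its UA.
    return "Googlebot Mobile" if "Mobile" in user_agent else "Googlebot Desktop"


def bot_distribution(lines: Iterable[str]) -> Dict[str, float]:
    """Share of Googlebot hits per bot type, in percent (one decimal)."""
    counts = Counter()
    for line in lines:
        match = LOG_RE.match(line)
        if match:
            bot = classify_bot(match["ua"])
            if bot:
                counts[bot] += 1
    total = sum(counts.values())
    if not total:
        return {}
    return {bot: round(100 * n / total, 1) for bot, n in counts.items()}
```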

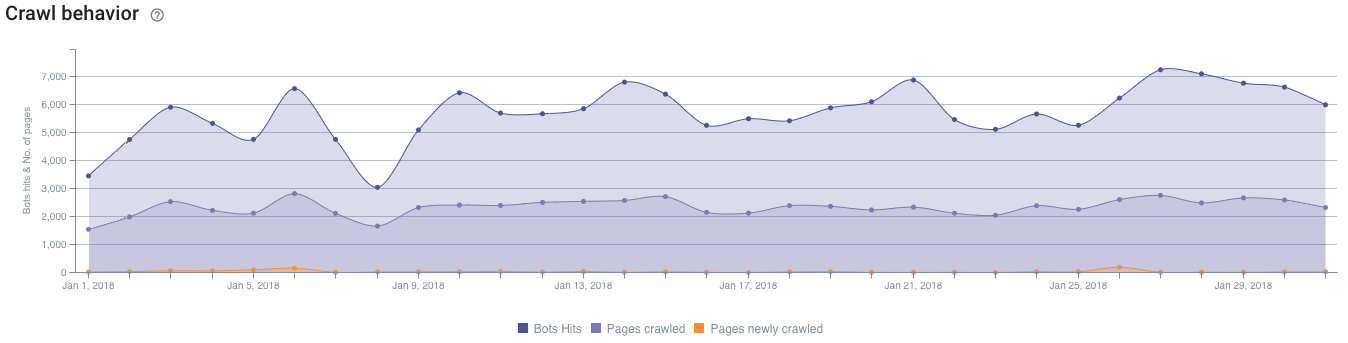

The crawl behavior graph interests me because of the drop in the number of crawled pages and bot hits on January 8th. The website has been the target of daily hacking attempts for many weeks, which sometimes has a significant impact on the server and causes the classic 500 errors on the payment page, which then no longer works properly.
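A drop like this can also be flagged automatically from the logs. The Python sketch below is only an illustration under stated assumptions: the input is a list of (timestamp, HTTP status) pairs for bot hits, already parsed from the logs, and the 7-day window and 50% threshold are arbitrary values chosen for the example, not OnCrawl settings.

```python
from collections import Counter
from datetime import datetime


def daily_counts(entries):
    """entries: iterable of (datetime, status) pairs for bot hits.
    Returns per-day hit counts and per-day 5xx error counts."""
    hits, errors = Counter(), Counter()
    for ts, status in entries:
        day = ts.date()
        hits[day] += 1
        if 500 <= status <= 599:
            errors[day] += 1
    return hits, errors


def flag_drops(hits, window=7, threshold=0.5):
    """Flags days whose hit count falls below `threshold` times the mean of
    the previous `window` days present in `hits` (days with zero hits are
    absent from the Counter, so they are not compared)."""
    days = sorted(hits)
    flagged = []
    for i, day in enumerate(days):
        previous = [hits[d] for d in days[max(0, i - window):i]]
        if previous and hits[day] < threshold * (sum(previous) / len(previous)):
            flagged.append(day)
    return flagged


# Synthetic data: 100 hits a day for a week, then 30 hits (10 of them 500s).
entries = [(datetime(2018, 1, d, 12), 200) for d in range(1, 8) for _ in range(100)]
entries += [(datetime(2018, 1, 8, 12), 500 if i < 10 else 200) for i in range(30)]
hits, errors = daily_counts(entries)
print(flag_drops(hits), errors[datetime(2018, 1, 8).date()])
# [datetime.date(2018, 1, 8)] 10
```

Looking at the 5xx count on the same day helps separate a server problem from a genuine loss of interest by the bot.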

The curve of newly crawled pages is also as flat as a flatlining EEG, but we will see why later on.

Impact on SEO

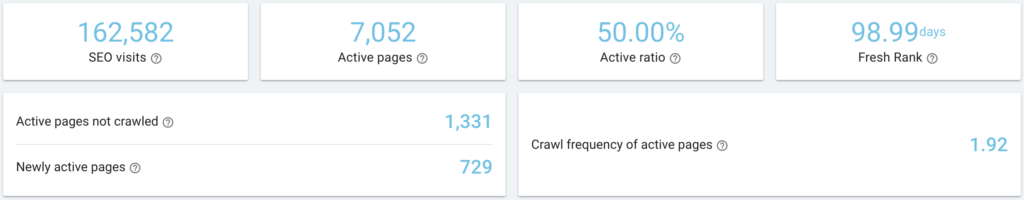

The SEO impact data is instructive, and for my analysis I look at the Fresh Rank first. This metric, developed by OnCrawl, gives the number of days between a page's first crawl and its first SEO visit. Here it is about three months. That is a relatively long delay, given that most of these pages are product pages added throughout the year. The product range itself does not change very much (the market has been mature for several years). Joint work with paid search is very useful here: the synergy we set up lets us run an aggressive sponsored-links campaign during the first 90 days after a product is added. After that, the sponsored links are deactivated or the campaign is scaled back, because SEO performs better in terms of visitor quality and revenue generation.
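To make the metric concrete, here is a Python approximation of a Fresh Rank style calculation, together with a check for the 90-day paid window described above. OnCrawl's exact formula is not documented here, so this is an assumption: per page, the number of days from the first bot hit to the first SEO visit, averaged over pages that have both dates. The input dictionaries are hypothetical exports from the logs and from Google Analytics.

```python
from datetime import date
from statistics import mean
from typing import Dict, Optional


def fresh_rank(first_crawl: Dict[str, date], first_seo_visit: Dict[str, date]) -> Optional[float]:
    """Average days between a page's first crawl and its first SEO visit.
    Pages never visited (or with inconsistent dates) are ignored."""
    delays = [
        (first_seo_visit[url] - crawled).days
        for url, crawled in first_crawl.items()
        if url in first_seo_visit and first_seo_visit[url] >= crawled
    ]
    return mean(delays) if delays else None


def in_paid_boost_window(added_on: date, today: date, days: int = 90) -> bool:
    """True while a product is within the first `days` days after being added,
    i.e. the period during which the sponsored-links campaign stays aggressive."""
    return 0 <= (today - added_on).days < days


first_crawl = {"/p/a": date(2018, 1, 1), "/p/b": date(2018, 1, 1), "/p/c": date(2018, 2, 1)}
first_visit = {"/p/a": date(2018, 3, 1), "/p/b": date(2018, 4, 1)}
print(fresh_rank(first_crawl, first_visit))  # 74.5 (59 and 90 days)
```

A Fresh Rank close to the length of the paid window is a sign that the two channels hand over at roughly the right moment.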

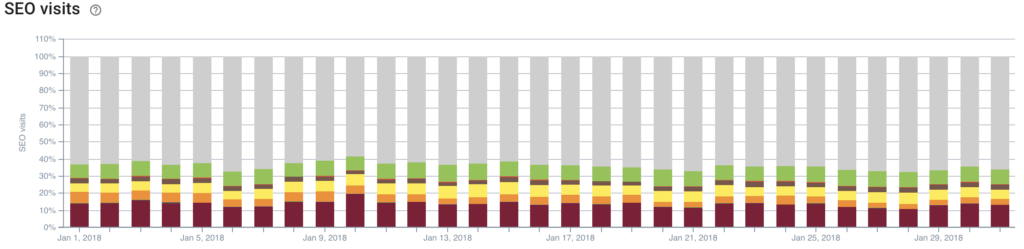

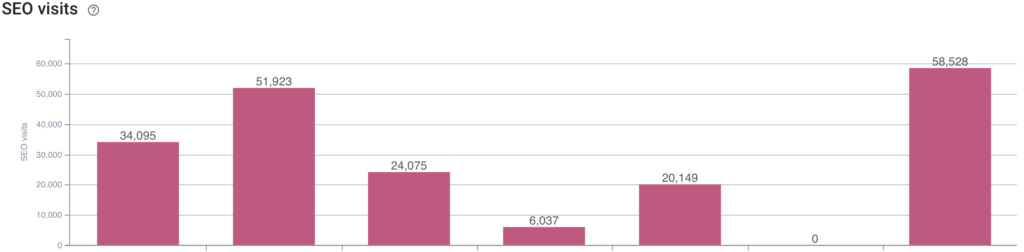

I can then analyze SEO visits by page group, which confirms that product pages account for most of the organic traffic captured.
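The same breakdown can be reproduced outside the tool. This Python sketch assumes, hypothetically, that you have (landing URL, SEO sessions) pairs exported from Google Analytics and a URL-to-group mapping taken from the crawl segmentation.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def seo_share_by_group(visits: Iterable[Tuple[str, int]],
                       url_group: Dict[str, str]) -> Dict[str, float]:
    """Returns each page group's share of SEO sessions, in percent,
    largest first. URLs missing from the mapping count as 'ungrouped'."""
    totals = Counter()
    for url, sessions in visits:
        totals[url_group.get(url, "ungrouped")] += sessions
    grand_total = sum(totals.values())
    if not grand_total:
        return {}
    return {group: round(100 * n / grand_total, 1) for group, n in totals.most_common()}


visits = [("/p/1", 60), ("/p/2", 20), ("/c/shoes", 15), ("/blog", 5)]
url_group = {"/p/1": "product", "/p/2": "product", "/c/shoes": "category"}
print(seo_share_by_group(visits, url_group))
# {'product': 80.0, 'category': 15.0, 'ungrouped': 5.0}
```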

The orange, yellow and green page groups are pages whose main keyword is highly competitive. Moreover, although these pages rank between positions 3 and 8 on the first page of results, the strong brand awareness of some competitors disrupts the theoretical CTR associated with each position. Amazon's recent arrival in paid search has also changed the rules of the SERP and pushed the market back toward an aggressive SEO strategy to recover lost sales, notably on Google Shopping.

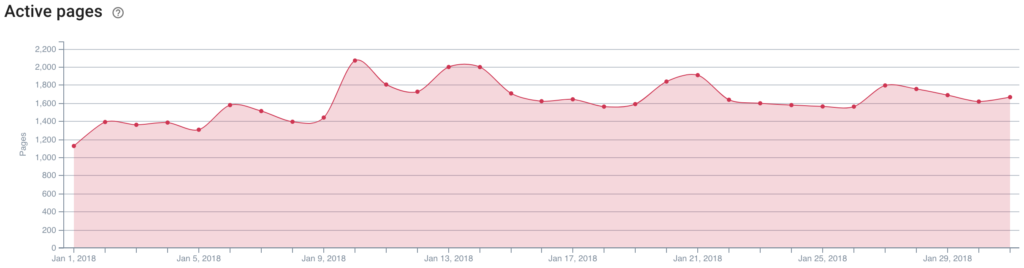

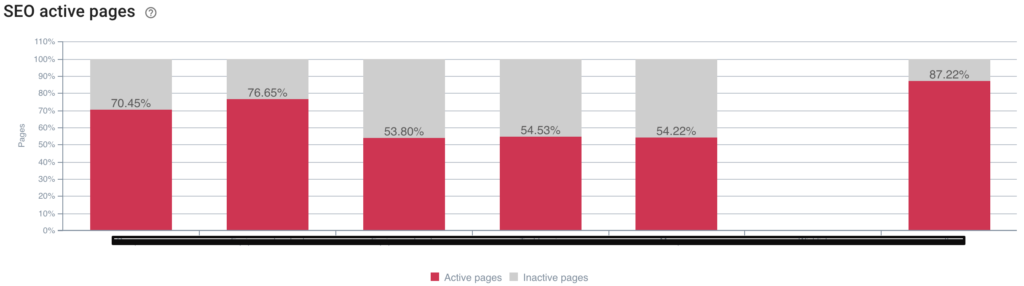

The active pages curve clearly shows a change during the sales period.

Crawl quality

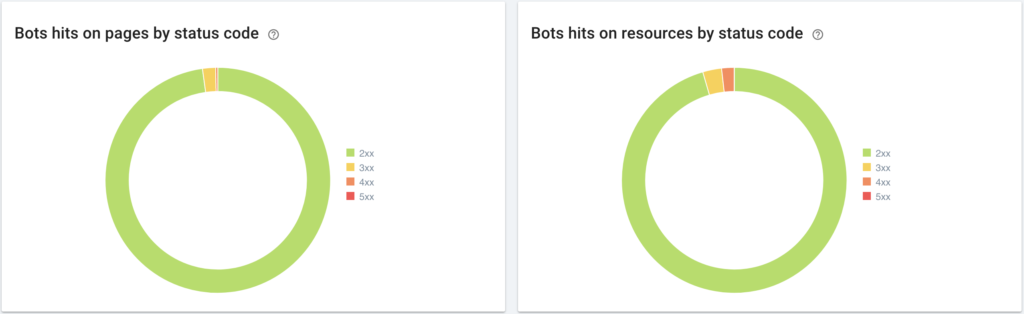

Overall, I am satisfied with the results shown in this graph. Because the product life cycle changes significantly, especially at the end and beginning of the year, the number of 404 errors was considerable in 2016 when the SEO strategy was put in place. Rules that automatically set up permanent redirects for 404 errors, based on a dozen or so scenarios, made it possible to eradicate them. The 404 errors shown here (543 in total) concern redirects that have not been implemented yet (the automation kicks in after 7 days if no human intervention has been detected).
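The actual dozen scenarios are not described, so the Python sketch below only illustrates the general mechanism under hypothetical assumptions: a manual redirect always wins, nothing happens during the 7-day grace period, and after that a single example scenario walks up the URL path until it finds a live page (falling back to the homepage). The URL scheme and function names are made up for the example.

```python
from datetime import date, timedelta
from typing import Dict, Optional, Set

GRACE_PERIOD = timedelta(days=7)  # automation only kicks in after 7 days


def parent_path(url: str) -> str:
    """'/shoes/running/runner-x' -> '/shoes/running/' (hypothetical URL scheme)."""
    trimmed = url.rstrip("/")
    return trimmed.rsplit("/", 1)[0] + "/" if "/" in trimmed.lstrip("/") else "/"


def auto_redirect_target(url: str, first_404: date, today: date,
                         manual_redirects: Dict[str, str],
                         live_urls: Set[str]) -> Optional[str]:
    """Returns the URL a 404 should permanently redirect to, or None to keep waiting."""
    if url in manual_redirects:           # a human already handled it
        return manual_redirects[url]
    if today - first_404 < GRACE_PERIOD:  # still inside the 7-day window
        return None
    # Example scenario: walk up the path until a live page is found.
    candidate = parent_path(url)
    while candidate != "/" and candidate not in live_urls:
        candidate = parent_path(candidate)
    return candidate


live = {"/shoes/"}
print(auto_redirect_target("/shoes/running/runner-x", date(2018, 1, 1), date(2018, 1, 10), {}, live))
# /shoes/
```

The returned target would then be served with a permanent redirect status; that part depends on your server or CMS and is left out.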

Cross-analysis with Google Analytics data

Bot behavior

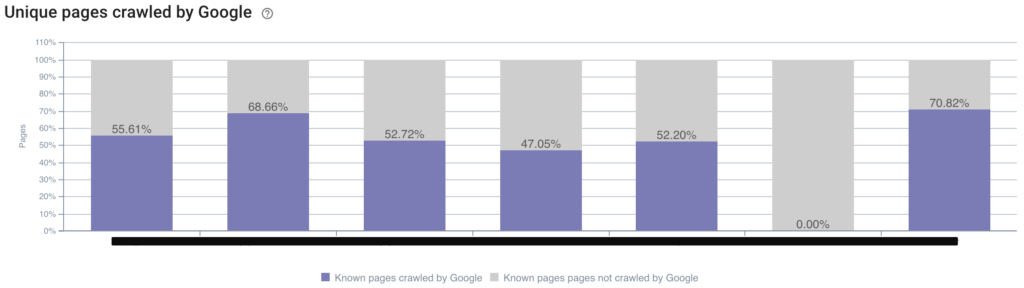

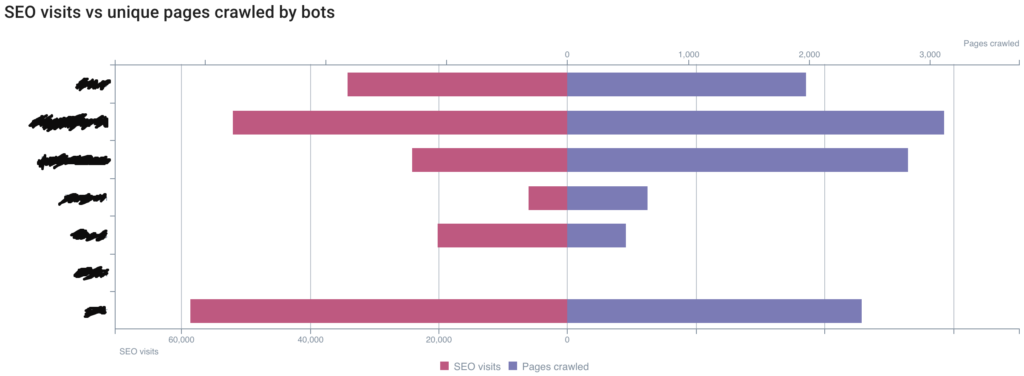

The graph speaks for itself. Substantial SEO work remains, and it goes through internal linking, to encourage the crawling of pages that Google does not crawl (note that the category with no crawling at all consists of URLs generated automatically when products are added to a “wishlist”). For that category, the result should be read the other way around.
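The underlying calculation is a simple cross-reference between the crawl and the logs. In this Python sketch, the inputs are assumptions: a URL-to-group mapping for every page the crawler found in the site structure, and the set of URLs that received at least one Googlebot hit over the period.

```python
from collections import Counter
from typing import Dict, Set


def crawl_coverage(structure: Dict[str, str], googlebot_urls: Set[str]) -> Dict[str, float]:
    """Returns, per page group, the percentage of pages in the site
    structure that Googlebot crawled at least once."""
    known, crawled = Counter(), Counter()
    for url, group in structure.items():
        known[group] += 1
        if url in googlebot_urls:
            crawled[group] += 1
    return {group: round(100 * crawled[group] / known[group], 1) for group in known}


structure = {"/p/1": "product", "/p/2": "product", "/wishlist/1": "wishlist", "/c/shoes": "category"}
print(crawl_coverage(structure, {"/p/1", "/c/shoes"}))
# {'product': 50.0, 'wishlist': 0.0, 'category': 100.0}
```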

Traffic impact

The first two rows represent my most important categories, which are the focus of my SEO actions (apart from the product pages shown in the last row). There are still many opportunities to explore.

I then get a more detailed view of SEO visits to pages known to both OnCrawl and Google.

Inactive pages are fairly numerous on the website: many pages receive no SEO visits at all. These are the pages that, without access to log data, might be optimized in vain. SEO actions need to start from active pages in order to reduce the share of inactive pages.
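Here is a minimal Python sketch of the active/inactive split. It assumes the usual definition that an active page received at least one SEO visit over the period, and works only on pages known to both the crawler and Google; the input sets and dictionary are hypothetical exports.

```python
from typing import Dict, Set, Tuple


def active_inactive(crawler_urls: Set[str], googlebot_urls: Set[str],
                    seo_visits: Dict[str, int]) -> Tuple[Set[str], Set[str]]:
    """Splits pages known to both the crawler and Google into active pages
    (at least one SEO visit) and inactive pages (none)."""
    known_to_both = crawler_urls & googlebot_urls
    active = {url for url in known_to_both if seo_visits.get(url, 0) > 0}
    return active, known_to_both - active


active, inactive = active_inactive({"/a", "/b", "/c", "/d"}, {"/a", "/b", "/c"}, {"/a": 3, "/b": 0, "/d": 5})
print(sorted(active), sorted(inactive))  # ['/a'] ['/b', '/c']
```

The inactive rate is then simply `len(inactive) / (len(active) + len(inactive))`, which gives a figure to track as SEO actions roll out.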

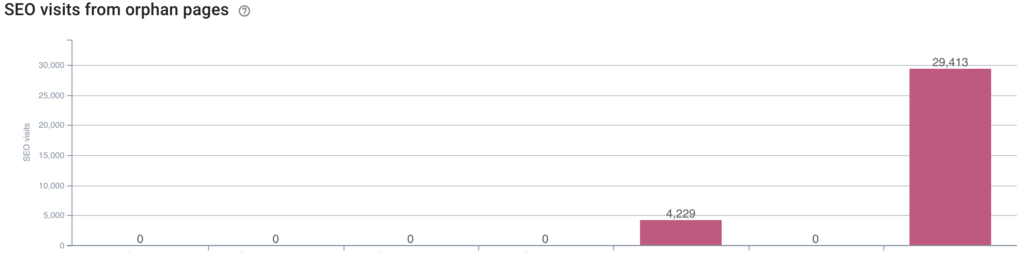

Orphan pages

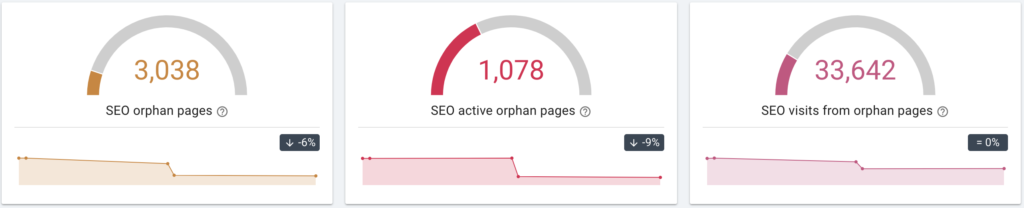

By definition, an orphan page is absent from the site structure: no internal link points to it. This is a sign of poor internal linking. We saw above that the internal linking needs to be more efficient, and this applies in particular to the many orphan pages.
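Orphan pages can be detected by comparing what the crawler reaches through internal links with what other sources reveal. In this Python sketch, the assumed inputs are the set of URLs found by following links, the URLs seen in Googlebot hits, and SEO visits per URL from Google Analytics.

```python
from typing import Dict, Set


def orphan_pages(linked_urls: Set[str], googlebot_urls: Set[str],
                 seo_visits: Dict[str, int]) -> Dict[str, int]:
    """Pages that Google crawls or that receive SEO visits, but that the
    crawler never reached through internal links. Returns URL -> SEO visits."""
    seen_elsewhere = googlebot_urls | {url for url, n in seo_visits.items() if n > 0}
    return {url: seo_visits.get(url, 0) for url in sorted(seen_elsewhere - linked_urls)}


orphans = orphan_pages({"/", "/c/shoes"}, {"/", "/brand/acme", "/p/old"},
                       {"/brand/acme": 12, "/p/99": 4, "/c/shoes": 30})
print(orphans)  # {'/brand/acme': 12, '/p/99': 4, '/p/old': 0}

# Orphan pages that still receive organic traffic: the first candidates to relink.
print({url: n for url, n in orphans.items() if n > 0})  # {'/brand/acme': 12, '/p/99': 4}
```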

Here I have a more precise view of the orphan pages receiving organic traffic: the brand pages and some product pages.

What SEO actions to implement following log analysis?

OnCrawl offers many other graphs, data processing options and ways to correlate with other data sources (including Majestic SEO) to refine the analysis. In my opinion, two major areas of work emerge:

- Optimization of loading times.

- The implementation of an efficient internal linking strategy.

Depending on the categories and products that need work from a marketing point of view, and on the data collected by page type, I will be able to prioritize optimizations on the pages most likely to deliver short-term results. For each graph, OnCrawl lets you see the detailed list of URLs concerned, so you can focus your SEO roadmap on specific pages based on tangible evidence.
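One possible way to turn these URL lists into a short-term shortlist is sketched below in Python. The heuristic is hypothetical, not the author's method: keep pages in the groups marketing wants to push that Google already crawls but that receive no SEO visits yet, and rank them by Googlebot hits.

```python
from typing import Dict, List, Set


def priority_pages(groups: Dict[str, str], priority_groups: Set[str],
                   googlebot_hits: Dict[str, int], seo_visits: Dict[str, int]) -> List[str]:
    """Hypothetical heuristic: inactive pages that Google already crawls,
    in the marketing priority groups, most-crawled first."""
    candidates = [
        url for url, group in groups.items()
        if group in priority_groups
        and googlebot_hits.get(url, 0) > 0
        and seo_visits.get(url, 0) == 0
    ]
    return sorted(candidates, key=lambda url: googlebot_hits[url], reverse=True)


groups = {"/p/1": "product", "/p/2": "product", "/p/3": "product", "/b/x": "brand"}
print(priority_pages(groups, {"product"}, {"/p/1": 5, "/p/2": 20, "/b/x": 9}, {"/p/1": 0}))
# ['/p/2', '/p/1']
```

Ranking by crawl frequency assumes that pages Google visits often will reflect on-page changes sooner; other criteria (margin, stock, search volume) could replace or complement it.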
